10 Easy Ways To Instantly Index a Website in Google Search

Do you want more organic search traffic to your site? I’m willing to bet the answer is yes, because we all do! Having a website is now an essential part of an online presence.

You can take the tortoise approach and just wait for Google’s search bots to find and index your website, but that can take weeks or even months.

Or you can make it happen now by putting a little energy into your blog and following the steps below.

Disclosure: I may receive affiliate compensation for some of the links below at no cost to you if you decide to purchase a paid plan. You can read our affiliate disclosure in our privacy policy.

10 Easy Ways To Index a Website in Google Search

- Create at least 10 Quality Posts

- Interlink Your Content

- Be Everywhere

- Install Sitemap

- Use Robots.txt

- Share on RSS Aggregators

- Check for Crawl Errors

- Prevent Low-Quality Content/Pages From Being Indexed

- Solve Others’ Burning Problems

- Upgrade Old Content

Create at least 10 Quality Posts

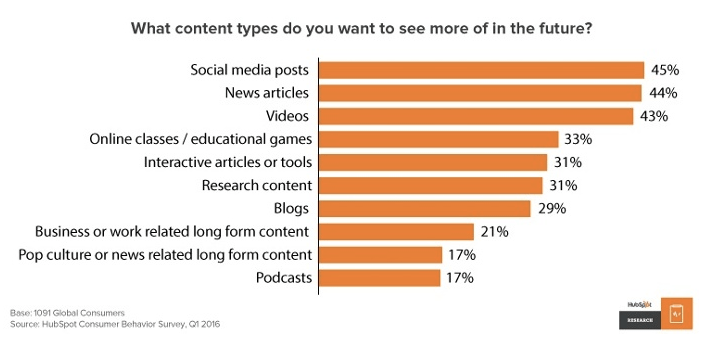

Before launching your website and making it accessible to the public, you should write at least 10 quality posts that have been proofread, edited, and optimized for SEO.

That way you will have quality content at your disposal and can focus on other important tasks instead of rushing around at the last minute.

Quality content also signals to Google’s search bot that your website is valuable and helps it rank against your competitors.

Blog posts tend to get crawled and indexed faster than static pages.

It has also been found that websites with blogs get an average of 434% more indexed pages and 97% more indexed links. Blogging works for every kind of business or niche.

Yes, blogging is tiring and takes a lot of effort, but I have found the rewards absolutely worth it.

And you don’t have to blog every day unless you run a news site.

Interlink Your Content

This is the biggest mistake that far too many website owners make. They completely ignore interlinking their articles and think that a related-posts list at the end of each article will do the rest.

Google uses anchor text as a ranking signal, and internal links let Googlebot reach deeper pages and articles on your site.

Googlebot has a limited crawl budget for each site, so helping it reach more of your content in that time works in your favor.

• Make it easy for spiders and bots by linking thoroughly within your posts and pages.

• Make sure your interlinking is logical and uses rich, descriptive anchor text.

It’s important to structure your website navigation so that it makes sense to both your readers and Google’s bots, and so that linked elements are obviously related.

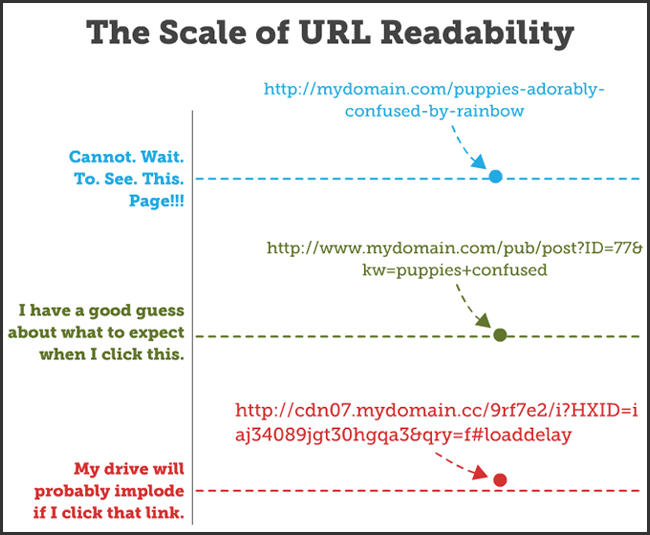

Interlinking also exposes your other valuable content to your audience, and to use that advantage to its full potential, your blog should have a clean link structure.

The best way I have found to link one post from another is to cite it as a reference, or to let the audience know that you have a related article.

For example, you can link inline, like this:

To improve your Google ranking, you can improve your internal site link structure.

Or you can add a separate link line, like this:

Related: How To Improve Your Site’s Internal Link Structure
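To audit the interlinking described above, you can pull every link out of a post’s HTML and check where it points and what its anchor text says. The Python sketch below uses the standard-library `html.parser`; the `InternalLinkParser` class, the `internal_links` and `weak_anchors` functions, the example.com URLs, and the list of generic anchor phrases are all hypothetical, chosen only for illustration.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse

# Hypothetical list of anchor phrases that tell Google nothing about the target page.
GENERIC_ANCHORS = {"click here", "here", "read more", "this post"}


class InternalLinkParser(HTMLParser):
    """Collect (absolute URL, anchor text) pairs for links to the same host."""

    def __init__(self, page_url):
        super().__init__()
        self.page_url = page_url
        self.host = urlparse(page_url).netloc
        self.links = []
        self._href = None
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                # Resolve relative links such as "/contact/" against the page URL.
                self._href = urljoin(self.page_url, href)
                self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            if urlparse(self._href).netloc == self.host:
                # Collapse whitespace in the anchor text.
                text = " ".join("".join(self._text).split())
                self.links.append((self._href, text))
            self._href = None


def internal_links(page_url, html):
    """Return the internal links found in one page's HTML."""
    parser = InternalLinkParser(page_url)
    parser.feed(html)
    parser.close()
    return parser.links


def weak_anchors(links):
    """Return URLs whose anchor text is generic rather than descriptive."""
    return [url for url, text in links if text.lower() in GENERIC_ANCHORS]
```

Running `internal_links` over each post and collecting the targets also shows which posts nothing links to, which are the ones Googlebot is least likely to reach.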

Be Everywhere

Social sites get a lot of attention from search engines, so sharing your content there is a very effective way to get your site noticed by Google’s bots and get it crawled and indexed, sometimes within hours.

LinkedIn and Quora are examples of social sites where you can submit your content.

Social sites like these can also send you a good amount of traffic, because they are full of people looking for all kinds of content.

Web directories were created for two purposes.

First, to let people know which blogs exist in the niches they are interested in, and second, to let search bots know about your site so they can crawl it.

There are many web directories on the web, and not all of them are good. Research the good directories in your niche before submitting your website.

Note: Don’t use link-exchange web directories to get your website indexed in Google Search.

Sharing your posts on social media also has SEO benefits. As Google once said:

“Yes, we do use it as a signal. It is used as a signal in our organic and news rankings. We also use it to enhance our news universal by marking how many people shared an article.”

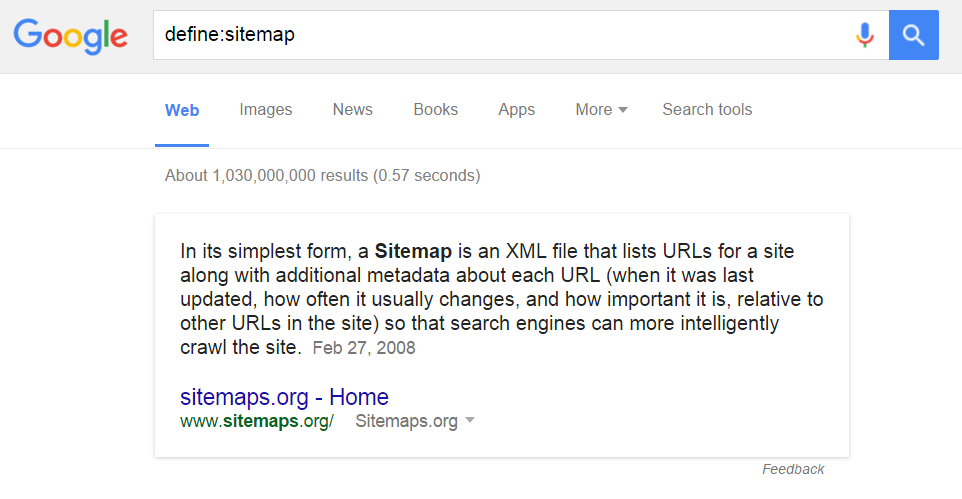

Install Sitemap

I hope you already know what a sitemap is and have one.

If you don’t have a sitemap, How To Create WordPress Sitemap Using Google XML Sitemap Plugin covers everything you need.

A sitemap lists the pages on your site so that search engine bots and crawlers can crawl and index it effectively.

Several WordPress plugins can create sitemaps, such as Yoast SEO and All in One SEO.

If you have a static website, look for a tool that creates XML sitemaps, or use an XML sitemap generator.

A sitemap helps your content get crawled and indexed, which is the first step toward appearing at the top of search results.

That only happens if Google’s bots can find your valuable content and know which pages should be indexed and which should not.

On WordPress, you can easily generate an XML sitemap by installing the Google XML Sitemaps plugin.
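If you run a static site and would rather not depend on a generator tool, the sitemap format is simple enough to build yourself. The Python sketch below writes a minimal XML sitemap following the sitemaps.org protocol: a `urlset` of `url` entries, each with a `loc` and an optional `lastmod`. The `build_sitemap` function and the example.com URLs are hypothetical placeholders, not part of any plugin.

```python
import xml.etree.ElementTree as ET
from datetime import date

# Namespace required by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build sitemap XML (UTF-8 bytes) from (url, last_modified_date) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, last_modified in pages:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
        # lastmod uses the W3C date format, e.g. 2024-01-15.
        ET.SubElement(entry, "lastmod").text = last_modified.isoformat()
    return ET.tostring(urlset, encoding="utf-8", xml_declaration=True)


if __name__ == "__main__":
    pages = [
        ("https://example.com/", date(2024, 1, 15)),
        ("https://example.com/blog/my-first-post/", date(2024, 2, 1)),
    ]
    print(build_sitemap(pages).decode("utf-8"))
```

Save the output as `sitemap.xml` in your site’s root folder and regenerate it whenever you publish or update a post, so each `lastmod` stays accurate.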

Use Robots.txt

Robots.txt is a plain text file that tells search engine bots which pages they may crawl and which pages to stay away from. Well-behaved spiders read this file before crawling anything else on your blog; if there is no robots.txt, they assume they may crawl every page.

Now you might wonder “Why on earth would I want search engines not to index a page on my site?” That’s a good question!

• How To Optimize WordPress Robots.Txt File For SEO

In short, you don’t want search engines indexing your admin pages or private pages, like downloads. Otherwise, every page that exists on your site is counted as a separate page for search result purposes, including pages that are useless to searchers.

Note: It’s very important to use a plain text editor, not something like Word or WordPad, which may add hidden formatting codes that confuse the bots.

WordPress bloggers can optimize their robots.txt files by using a reliable WordPress plugin like Yoast’s SEO plugin.
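Before uploading a robots.txt file, you can check how a crawler would read it with Python’s standard-library `urllib.robotparser`. The rules and example.com URLs below are hypothetical, loosely modeled on the admin and download pages mentioned above. One caveat: Python’s parser applies the first rule that matches, while Google uses the most specific one, so the two can disagree when `Allow` and `Disallow` lines overlap.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for a WordPress blog: keep crawlers out of the
# admin area and a downloads folder, and point them at the sitemap.
# robots.txt is public and only advisory, so it does not protect private files.
ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/
Disallow: /downloads/

Sitemap: https://example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for path in ["/blog/my-first-post/", "/wp-admin/", "/downloads/ebook.pdf"]:
    url = "https://example.com" + path
    verdict = "allowed" if parser.can_fetch("Googlebot", url) else "blocked"
    print(f"{path}: {verdict}")

# site_maps() (Python 3.8+) returns the Sitemap URLs listed in the file.
print("Sitemaps:", parser.site_maps())
```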

Share on RSS Aggregators

RSS stands for Really Simple Syndication or Rich Site Summary.

RSS can help you increase readership and conversions, and it can also help your pages get indexed and discovered in search results.

An RSS feed is an automatically updated feed of your website that changes whenever a new post is published on your site.

It helps users consume a large amount of content and information in less time.

It also lets users subscribe to your feed so that they receive new posts automatically.

Setting up an RSS feed on your site is very easy; just watch the video below.

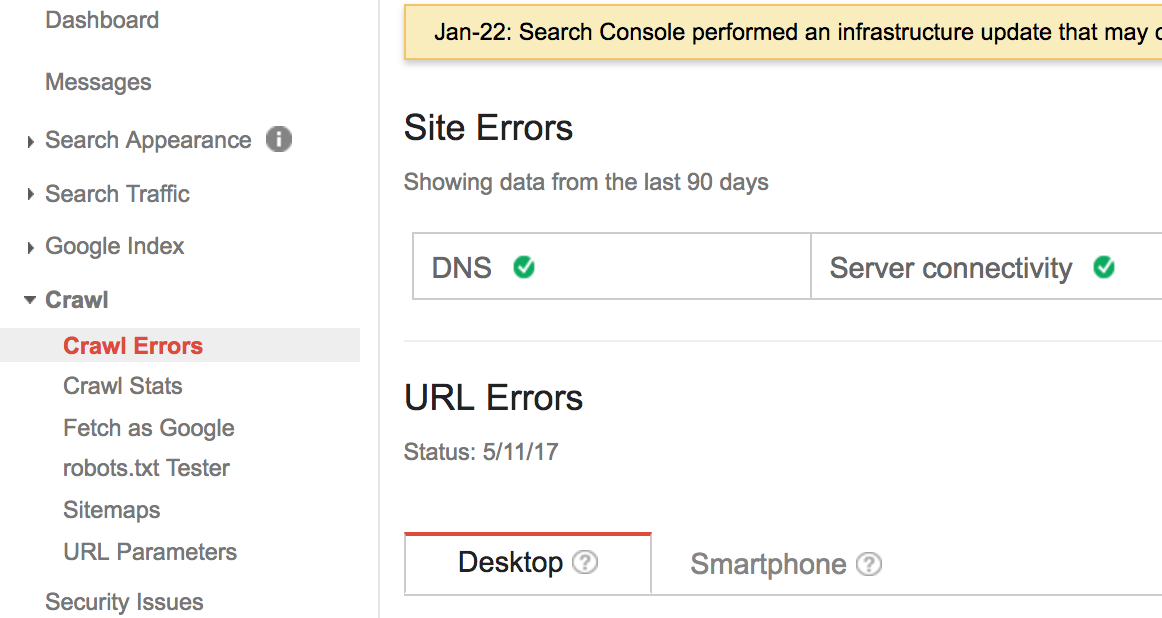
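WordPress generates a feed for you, but on a static site you may have to build one yourself. Here is a minimal sketch of an RSS 2.0 feed using only Python’s standard library; the `build_rss` function and the post data are hypothetical, and a real feed would usually include more fields, such as a `description` for each post.

```python
import xml.etree.ElementTree as ET
from datetime import datetime, timezone
from email.utils import format_datetime


def build_rss(title, site_url, description, posts):
    """Build an RSS 2.0 feed as UTF-8 bytes.

    posts: dicts with "title", "url" and a timezone-aware "published" datetime,
    listed newest first.
    """
    rss = ET.Element("rss", version="2.0")
    channel = ET.SubElement(rss, "channel")
    ET.SubElement(channel, "title").text = title
    ET.SubElement(channel, "link").text = site_url
    ET.SubElement(channel, "description").text = description
    for post in posts:
        item = ET.SubElement(channel, "item")
        ET.SubElement(item, "title").text = post["title"]
        ET.SubElement(item, "link").text = post["url"]
        ET.SubElement(item, "guid").text = post["url"]
        # RSS 2.0 uses RFC 822 dates, e.g. "Mon, 15 Jan 2024 09:00:00 +0000".
        ET.SubElement(item, "pubDate").text = format_datetime(post["published"])
    return ET.tostring(rss, encoding="utf-8", xml_declaration=True)


if __name__ == "__main__":
    feed = build_rss(
        "My Blog",
        "https://example.com/",
        "Tips on blogging and SEO",
        [{
            "title": "10 Easy Ways To Index a Website in Google Search",
            "url": "https://example.com/index-website/",
            "published": datetime(2024, 1, 15, 9, 0, tzinfo=timezone.utc),
        }],
    )
    print(feed.decode("utf-8"))
```

Publish the output at a stable address such as `/feed.xml` so aggregators and subscribers can keep polling the same URL.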

Check For Crawl Errors

Google dislikes 404 pages and other pages that return errors. The crawl error data in Google Search Console gives you critical information about Google’s crawl activity and the errors it ran into.

If the number of 404 errors rises, or pages stop getting crawled for some other reason, you can see it in Google Search Console.

Here’s what you should be monitoring at least once a month:

- Crawl errors

- Fetch as Google, now replaced by the URL Inspection tool (this shows you what a specific page of your site looks like to Google)

- Crawl stats
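Search Console reports the errors Google found, but you can also catch broken pages yourself between checks. The Python sketch below sends a HEAD request to each URL and reports anything unreachable or returning a 4xx/5xx status. The `fetch_status` and `find_crawl_errors` names are hypothetical, and some servers reject HEAD requests (for example with a 405), so treat every reported error as something to verify rather than a confirmed problem.

```python
from urllib.error import HTTPError
from urllib.request import Request, urlopen


def fetch_status(url, timeout=10):
    """Return the final HTTP status for url via a HEAD request, or None if unreachable."""
    request = Request(url, method="HEAD", headers={"User-Agent": "simple-link-checker"})
    try:
        # urlopen follows redirects, so this is the status of the final page.
        with urlopen(request, timeout=timeout) as response:
            return response.status
    except HTTPError as err:  # 4xx and 5xx responses raise HTTPError
        return err.code
    except OSError:  # DNS failures, refused connections, timeouts
        return None


def find_crawl_errors(urls, get_status=fetch_status):
    """Return (url, status) pairs that are unreachable or return a 4xx/5xx status."""
    errors = []
    for url in urls:
        status = get_status(url)
        if status is None or status >= 400:
            errors.append((url, status))
    return errors
```

A natural input is the list of `<loc>` URLs from your sitemap; the `get_status` parameter lets you swap in a different fetcher, or a fake one when testing.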

Prevent Low-Quality Content/Pages From Being Indexed

There are some pages you don’t want Google or other search engines to index.

Here are the pages I recommend keeping out of search results, even if you think indexing them could help get your website indexed in Google Search:

Thank you pages: These are usually pages that someone lands on after signing up for your mailing list or downloading an ebook.

You don’t want people to skip the line and get right to the goods! If these pages get indexed, people can reach them without filling out your form, and you lose those leads.

Duplicate content: If any pages on your site have duplicate or near-duplicate content, such as a page variant you’re A/B testing, you don’t want them indexed.

Archive Pages: WordPress automatically creates archive pages that group your content by year, month, day, and category.

You don’t need this archive content crawled, because it repeats information that is already in your posts, and the bot would just do the same work twice.

Terms and Privacy Pages: There is no reason to have your terms and conditions, privacy policy, or disclaimer pages indexed.

Anyone looking for them can find a direct link in your website footer.
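The usual way to keep these pages out of Google’s index is a robots meta tag, `<meta name="robots" content="noindex">`, in each page’s `<head>`. The Python sketch below decides which pages get that tag from their URL paths; the patterns are hypothetical and depend on your permalink settings. Note that Google has to crawl a page to see its noindex tag, so don’t also block these pages in robots.txt.

```python
import re

NOINDEX_TAG = '<meta name="robots" content="noindex">'

# Hypothetical URL patterns for the page types listed above. Adjust them
# to your own permalink structure before using anything like this.
NOINDEX_PATTERNS = [
    re.compile(r"/thank-you/"),                             # thank-you pages
    re.compile(r"/\d{4}/(?:\d{2}/){0,2}$"),                 # date archives: /2024/, /2024/01/
    re.compile(r"/category/"),                              # category archives
    re.compile(r"/(?:terms|privacy-policy|disclaimer)/$"),  # legal pages
]


def robots_meta_tag(path):
    """Return the noindex tag for paths that should stay out of search, else None."""
    # re.match anchors each pattern at the start of the path.
    if any(pattern.match(path) for pattern in NOINDEX_PATTERNS):
        return NOINDEX_TAG
    return None  # no tag needed: pages are indexable by default
```

A page template would print the returned tag inside `<head>` when it is not `None`. For WordPress, SEO plugins such as Yoast SEO can set noindex on these page types without any code.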

Solve Others’ Burning Problems

Right now, your blog is new and hardly gets any traffic.

That can change if you solve other people’s problems by answering their questions and pointing them to your blog.

I used Quora to solve other people’s problems: I posted a short solution as my answer and linked to a complete tutorial on my blog.

Users who are interested in your answer will visit your blog, which also increases your reach.

Answering on Quora or any other platform is a long-term investment and can drive consistent, high-quality traffic to your site.

Upgrade Old Content

The more often you update, the more often Google crawls your website, and the better your chances of getting it indexed in Google Search.

Updating old evergreen content not only refreshes it but also makes it better for your audience, and Google loves fresh content on any topic.

Updating at least 4 times a month increases the chances of faster indexing.

While updating, check for outdated facts or terms, link out to fresh information, interlink with related posts, and check for broken links.

And lastly, update the content to provide a better experience.
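To keep a steady update schedule, it helps to know which posts have gone longest without a refresh. This hypothetical helper picks the oldest posts from a list of (URL, last-updated date) pairs, for example exported from your CMS or taken from your sitemap’s `lastmod` values. The 180-day threshold and the limit of 4 (matching the four updates a month suggested above) are assumptions to tune.

```python
from datetime import date, timedelta


def posts_due_for_update(posts, today, max_age_days=180, limit=4):
    """Return up to `limit` URLs not updated within max_age_days, oldest first.

    posts: iterable of (url, last_updated_date) pairs.
    """
    cutoff = today - timedelta(days=max_age_days)
    # Sorting (date, url) tuples puts the longest-neglected posts first.
    stale = sorted((updated, url) for url, updated in posts if updated < cutoff)
    return [url for _, url in stale[:limit]]


if __name__ == "__main__":
    posts = [
        ("https://example.com/first-post/", date(2023, 1, 1)),
        ("https://example.com/recent-post/", date(2024, 5, 1)),
    ]
    print(posts_due_for_update(posts, today=date.today()))
```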

Conclusion

Make sure you’re updating your site frequently, not just with new content but by updating old posts too.

It keeps Google coming back to crawl your site frequently and keeps those posts relevant for new visitors.

Ranking on page one and beating the big guys is possible, but it takes a lot of work and research to get there.

One last piece of advice.

Write down how you will monitor your indexing and analytics, and how you will update old information on your site.

It wouldn’t have been possible for me to grow as quickly as I did without a written plan.

What crawling and indexing methods have you used? What were your results?
